Cluster¶

Django Q uses Python’s multiprocessing module to manage a pool of workers that will handle your tasks. Start your cluster using Django’s manage.py command:

$ python manage.py qcluster

You should see the cluster starting:

10:57:40 [Q] INFO Q Cluster-31781 starting.

10:57:40 [Q] INFO Process-1:1 ready for work at 31784

10:57:40 [Q] INFO Process-1:2 ready for work at 31785

10:57:40 [Q] INFO Process-1:3 ready for work at 31786

10:57:40 [Q] INFO Process-1:4 ready for work at 31787

10:57:40 [Q] INFO Process-1:5 ready for work at 31788

10:57:40 [Q] INFO Process-1:6 ready for work at 31789

10:57:40 [Q] INFO Process-1:7 ready for work at 31790

10:57:40 [Q] INFO Process-1:8 ready for work at 31791

10:57:40 [Q] INFO Process-1:9 monitoring at 31792

10:57:40 [Q] INFO Process-1 guarding cluster at 31783

10:57:40 [Q] INFO Process-1:10 pushing tasks at 31793

10:57:40 [Q] INFO Q Cluster-31781 running.

SIGKILL cannot be caught by a process, so it ends the cluster immediately without any cleanup. Stopping the cluster with ctrl-c (SIGINT) or the SIGTERM signal will initiate the Stop procedure:

16:44:12 [Q] INFO Q Cluster-31781 stopping.

16:44:12 [Q] INFO Process-1 stopping cluster processes

16:44:13 [Q] INFO Process-1:10 stopped pushing tasks

16:44:13 [Q] INFO Process-1:6 stopped doing work

16:44:13 [Q] INFO Process-1:4 stopped doing work

16:44:13 [Q] INFO Process-1:1 stopped doing work

16:44:13 [Q] INFO Process-1:5 stopped doing work

16:44:13 [Q] INFO Process-1:7 stopped doing work

16:44:13 [Q] INFO Process-1:3 stopped doing work

16:44:13 [Q] INFO Process-1:8 stopped doing work

16:44:13 [Q] INFO Process-1:2 stopped doing work

16:44:14 [Q] INFO Process-1:9 stopped monitoring results

16:44:15 [Q] INFO Q Cluster-31781 has stopped.

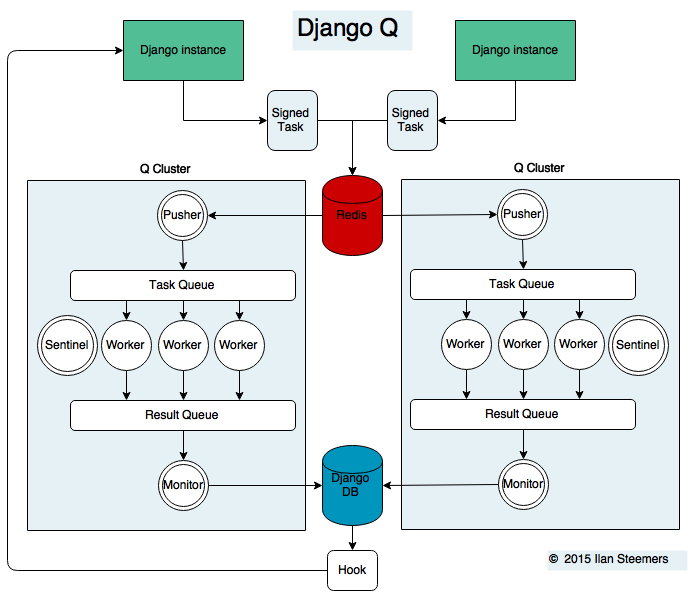

Multiple Clusters¶

You can have multiple clusters on multiple machines, working on the same queue as long as:

- They connect to the same Redis server.

- They use the same cluster name. See Configuration.

- They share the same SECRET_KEY
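As a rough sketch, the three conditions above could translate into a settings.py fragment like the one below, repeated identically on every machine. The cluster name, host name and secret are hypothetical placeholders; see Configuration for the authoritative list of options.

```python
# settings.py (sketch): identical on every machine that shares the queue.

# Hypothetical placeholder; all clusters and Django servers must share this value.
SECRET_KEY = "replace-with-your-shared-secret"

Q_CLUSTER = {
    # Hypothetical cluster name; it must match across machines.
    "name": "myproject",
    # Connection details for the one Redis server that all clusters use.
    "redis": {
        "host": "redis.example.internal",  # hypothetical host name
        "port": 6379,
        "db": 0,
    },
}
```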

Using a Procfile¶

If you host on Heroku or you are using Honcho you can start the cluster from a Procfile with an entry like this:

worker: python manage.py qcluster

Process managers¶

While you certainly can run a Django Q cluster with a process manager like Supervisor or Circus, it is not strictly necessary. The cluster has an internal sentinel that checks the health of all the processes and recycles or reincarnates them according to your settings. Because of the daemonic multiprocessing nature of the cluster, it is impossible for a process manager to determine the cluster’s health and resource usage.

An example circus.ini:

[circus]

check_delay = 5

endpoint = tcp://127.0.0.1:5555

pubsub_endpoint = tcp://127.0.0.1:5556

stats_endpoint = tcp://127.0.0.1:5557

[watcher:django_q]

cmd = python manage.py qcluster

numprocesses = 1

copy_env = True

Note that we only start one process. It is not a good idea to run multiple instances of the cluster in the same environment, since this does nothing to increase performance and in all likelihood will diminish it. Control your cluster using the workers, recycle and timeout settings in your Configuration.
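For illustration, the three tuning settings named above might appear in settings.py roughly as follows. The values are assumptions chosen for the example, not recommendations; the worker count simply mirrors the eight workers in the startup log.

```python
# settings.py (sketch): tune one cluster instead of starting several.
Q_CLUSTER = {
    "workers": 8,    # number of worker processes in the pool
    "recycle": 500,  # tasks a worker handles before it is recycled
    "timeout": 60,   # seconds a task may run before its worker is terminated
}
```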

Architecture¶

Signed Tasks¶

Tasks are first pickled and then signed using Django’s own django.core.signing module before being sent to a Redis list. This ensures that task packages on the Redis server can only be executed and read by clusters and Django servers that share the same secret key.

Optionally, the packages can be compressed before transport.
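The idea of pickling, signing and optionally compressing a package can be sketched with the standard library alone. This is not Django Q's implementation: hmac stands in for django.core.signing, and the names pack, unpack and SECRET_KEY are hypothetical.

```python
import hashlib
import hmac
import pickle
import zlib

# Hypothetical shared secret; stands in for Django's SECRET_KEY.
SECRET_KEY = b"shared-secret"
DIGEST_SIZE = hashlib.sha256().digest_size  # 32 bytes


def pack(task, compress=False):
    """Pickle a task, optionally compress it, and prepend an HMAC signature."""
    payload = pickle.dumps(task)
    if compress:
        payload = zlib.compress(payload)
    signature = hmac.new(SECRET_KEY, payload, hashlib.sha256).digest()
    return signature + payload


def unpack(package, compress=False):
    """Verify the signature first; only then decompress and unpickle."""
    signature, payload = package[:DIGEST_SIZE], package[DIGEST_SIZE:]
    expected = hmac.new(SECRET_KEY, payload, hashlib.sha256).digest()
    if not hmac.compare_digest(signature, expected):
        raise ValueError("bad signature: package rejected")
    if compress:
        payload = zlib.decompress(payload)
    return pickle.loads(payload)
```

Checking the signature before unpickling matters, because unpickling untrusted data can execute arbitrary code.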

Pusher¶

The pusher process continuously checks the Redis list for new task packages and pushes them on the Task Queue.

Worker¶

A worker process pulls a package off the Task Queue, checks the signing and unpacks the task. Before executing the task it sets a timer on the Sentinel indicating it’s about to start work. Afterwards the timer is reset and any results (including errors) are saved to the package. Irrespective of the failure or success of any of these steps, the package is then pushed onto the Result Queue.

Monitor¶

The result monitor checks the Result Queue for processed packages and saves both failed and successful packages to the Django database.
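The worker and monitor steps can be sketched as below. This is a simplified, single-process illustration: queue.Queue stands in for the multiprocessing queues, a plain list stands in for the Django database, and the signature check and Sentinel timer are left out. The function names and package layout are hypothetical.

```python
import queue


def worker_step(task_queue, result_queue):
    """Pull one package, run it, and always push it onto the result queue."""
    package = task_queue.get()
    try:
        package["result"] = package["func"](*package["args"])
        package["success"] = True
    except Exception as exc:
        # Errors are saved to the package instead of being raised.
        package["result"] = repr(exc)
        package["success"] = False
    result_queue.put(package)


def monitor_step(result_queue, database):
    """Save one processed package, failed or successful."""
    database.append(result_queue.get())


task_queue, result_queue, database = queue.Queue(), queue.Queue(), []
task_queue.put({"func": divmod, "args": (7, 2)})
task_queue.put({"func": divmod, "args": (1, 0)})  # raises ZeroDivisionError
for _ in range(2):
    worker_step(task_queue, result_queue)
    monitor_step(result_queue, database)
```

Both packages end up in the database: the first with its result, the second with the error stored as its result.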

Sentinel¶

The sentinel spawns all processes and then checks the health of all workers, including the pusher and the monitor. This includes checking timers on each worker for timeouts. In case of a sudden death or timeout, it will reincarnate the failing processes. When a stop signal is received, the sentinel will halt the pusher and instruct the workers and monitor to finish the remaining items. See Stop procedure.

Timeouts¶

Before each task execution the worker sets a timer on the sentinel and resets it again after execution. Meanwhile the sentinel checks whether any timer exceeds the timeout amount, in which case it will terminate the worker and reincarnate a new one.
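A minimal sketch of the check the sentinel performs on each timer might look like this. It assumes a timer is represented by the time the task started, or None while the worker is idle; the function name and that representation are assumptions, not Django Q's internals.

```python
import time


def timed_out(started_at, timeout, now=None):
    """Return True if a worker's timer has run longer than `timeout` seconds.

    `started_at` is None while the worker is idle, i.e. the timer is reset.
    """
    if started_at is None or timeout is None:
        return False
    if now is None:
        now = time.monotonic()
    return now - started_at > timeout
```

A sentinel loop would call such a check for every worker and terminate and replace any worker for which it returns True.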

Scheduler¶

Once a minute the scheduler checks for any scheduled task that should be starting.

- Creates a task from the schedule

- Subtracts 1 from django_q.Schedule.repeats

- Sets the next run time if there are repeats left or if the repeats value is negative
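The three scheduler steps above can be sketched as follows. The Schedule dataclass is a simplified stand-in for django_q.Schedule, and run_schedule and its interval parameter are hypothetical names introduced for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Schedule:
    """Simplified stand-in for django_q.Schedule."""
    func: str
    repeats: int
    next_run: datetime


def run_schedule(schedule, now, interval):
    """Apply the three scheduler steps to one due schedule."""
    # 1. Create a task from the schedule.
    task = {"func": schedule.func, "created": now}
    # 2. Subtract 1 from repeats.
    schedule.repeats -= 1
    # 3. Set the next run time if repeats are left (> 0) or negative (< 0).
    if schedule.repeats != 0:
        schedule.next_run = now + interval
    return task
```

In this sketch a schedule that starts with a negative repeats value never reaches zero, so it keeps being rescheduled.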

Stop procedure¶

When a stop signal is given, the sentinel exits the guard loop and instructs the pusher to stop pushing. Once this is confirmed, the sentinel pushes poison pills onto the task queue and will wait for all the workers to die. This ensures that the queue is emptied before the workers exit. Afterwards the sentinel waits for the monitor to empty the Result Queue, and then the stop procedure is complete.

- Send stop event to pusher

- Wait for pusher to exit

- Put poison pills in the Task Queue

- Wait for all the workers to clear the queue and stop

- Put a poison pill on the Result Queue

- Wait for monitor to process remaining results

- Signal that we have stopped
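The ordering of the steps above can be illustrated with a small sketch. It uses threads and queue.Queue in place of the cluster's processes and multiprocessing queues, and a plain queue in place of the Redis list, so it only shows the ordering, not Django Q's implementation. All names are hypothetical.

```python
import queue
import threading
import time

POISON = None  # hypothetical value used as the poison pill


def pusher(stop_event, source, task_queue):
    """Move tasks from the source onto the task queue until told to stop."""
    while not stop_event.is_set():
        try:
            task_queue.put(source.get(timeout=0.01))
        except queue.Empty:
            pass


def worker(task_queue, result_queue):
    """Process tasks until a poison pill arrives."""
    while True:
        task = task_queue.get()
        if task is POISON:
            break
        result_queue.put(task * 2)


def monitor(result_queue, saved):
    """Save results until a poison pill arrives."""
    while True:
        result = result_queue.get()
        if result is POISON:
            break
        saved.append(result)


def run_and_stop(tasks, worker_count=2):
    """Start the pipeline, then walk through the stop steps in order."""
    source, task_queue, result_queue = queue.Queue(), queue.Queue(), queue.Queue()
    saved, stop_event = [], threading.Event()
    for task in tasks:
        source.put(task)
    push = threading.Thread(target=pusher, args=(stop_event, source, task_queue))
    workers = [threading.Thread(target=worker, args=(task_queue, result_queue))
               for _ in range(worker_count)]
    mon = threading.Thread(target=monitor, args=(result_queue, saved))
    for thread in [push, mon, *workers]:
        thread.start()
    while not source.empty():   # sketch only: let the pusher drain the source
        time.sleep(0.01)
    stop_event.set()            # send stop event to pusher
    push.join()                 # wait for pusher to exit
    for _ in workers:
        task_queue.put(POISON)  # one poison pill per worker
    for w in workers:
        w.join()                # workers clear the queue, then stop
    result_queue.put(POISON)    # poison pill on the result queue
    mon.join()                  # monitor processes remaining results
    return saved                # signal that we have stopped
```

Because the pills are queued behind all pending tasks, and the monitor's pill is only sent after every worker has exited, no task or result is dropped.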

Warning

If you force the cluster to terminate before the stop procedure has completed, you can lose tasks and their results.

Reference¶

class Cluster¶

- start()¶

  Spawns a cluster and then returns.

- stop()¶

  Initiates Stop procedure and waits for it to finish.

- stat()¶

  Returns a Stat object with the current cluster status.

- pid¶

  The cluster process id.

- host¶

  The current hostname.

- sentinel¶

  Returns the multiprocessing.Process containing the Sentinel.

- timeout¶

  The cluster’s timeout setting in seconds.

- start_event¶

  A multiprocessing.Event indicating if the Sentinel has finished starting the cluster.

- stop_event¶

  A multiprocessing.Event used to instruct the Sentinel to initiate the Stop procedure.

- is_starting¶

  Bool. Indicates if the cluster is busy starting up.

- is_running¶

  Bool. Tells you if the cluster is up and running.

- is_stopping¶

  Bool. Shows that the stop procedure has been started.

- has_stopped¶

  Bool. Tells you if the cluster finished the stop procedure.

-